Data Lakes × Agentic AI: Building the Autonomous Enterprise of Tomorrow | Part 4

We’ve journeyed from concept to construction: Part 1 showed why data lakes are the oxygen of Agentic AI, Part 2 mapped how to architect a lakehouse that scales, and Part 3 drilled into the operational muscle that keeps autonomous systems sharp and trustworthy. Now we lift our gaze to the horizon.

Part 4 is a guided tour of the next decade - where federated “lake meshes,” vector-native storage, quantum-assisted analytics, and privacy-preserving synthetic realities transform today’s best practices into tomorrow’s baseline. Think of it as binoculars for your data strategy: you’ll glimpse the technologies, governance models, and sustainability imperatives already gathering on the edge of mainstream adoption.

Whether you’re plotting a three-year roadmap or simply chasing that next competitive edge, this final installment will help you anticipate the shifts instead of scrambling to react. Let’s step into the future and see how today’s lakehouse foundations evolve into the autonomous nervous system of the enterprise.

The Rise of Domain-Driven Lake Meshes

Federated Ownership Replaces Central Bottlenecks

Over the next few years, centralized data-platform teams will cede day-to-day control to domain squads - marketing, supply chain, risk - who own their slice of the lake “mesh-style.” Instead of submitting Jira tickets for new tables, squads spin up ingestion pipelines with self-service templates, enforce their own quality gates, and publish discoverable “data products” that come with SLAs and documentation baked in. This federated model accelerates agility: subject-matter experts iterate on Agentic use cases without waiting weeks for a platform backlog, while a lightweight platform team focuses on shared tooling, observability, and guardrails rather than line-item work.

Marketplace Economics Drive Reuse

A healthy mesh behaves like an internal app store where data products are ranked by freshness, quality score, and usage stats. Business units pay - either via internal chargeback or budget transfers - only for the products they consume. Popular data products attract more resources; stale ones are deprecated. This marketplace dynamic incentivizes producers to keep schemas crisp, tests green, and metadata rich, because poor quality directly affects “revenue.” Autonomous agents benefit by sourcing higher-grade inputs without bespoke pipelines, turning the mesh into a virtuous circle of supply and demand.
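
A minimal sketch of how such an internal catalog might rank products follows. It assumes each product exposes hours since its last refresh, a 0-1 quality score, and weekly query counts. The weights and the one-day freshness decay are illustrative choices, not a standard formula.

```python
from dataclasses import dataclass

@dataclass
class DataProduct:
    name: str
    hours_since_refresh: float   # freshness signal
    quality_score: float         # 0.0-1.0, e.g. share of passing data tests
    weekly_queries: int          # usage signal

def rank_products(products, w_fresh=0.3, w_quality=0.5, w_usage=0.2):
    """Return products sorted best-first by a weighted, normalized score (weights are assumptions)."""
    max_queries = max((p.weekly_queries for p in products), default=0) or 1
    def score(p):
        freshness = 1.0 / (1.0 + p.hours_since_refresh / 24.0)  # decays roughly per day
        usage = p.weekly_queries / max_queries                  # relative popularity
        return w_fresh * freshness + w_quality * p.quality_score + w_usage * usage
    return sorted(products, key=score, reverse=True)

catalog = [
    DataProduct("orders_daily", 2, 0.98, 1200),
    DataProduct("legacy_crm_dump", 720, 0.60, 40),
    DataProduct("inventory_snapshot", 12, 0.92, 800),
]
print([p.name for p in rank_products(catalog)])
# ['orders_daily', 'inventory_snapshot', 'legacy_crm_dump']
```

A stale, rarely queried feed sinks to the bottom even with a middling quality score, which is the deprecation signal the marketplace relies on.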

Contract-First Collaboration Reduces Breakage

In a mesh world, teams negotiate machine-readable contracts that specify schema, latency, null tolerances, and deprecation timelines before a single row flows. Specifications such as the Open Data Contract Standard (ODCS), or GraphQL-based schema contracts, capture these rules, and CI checks can automatically reject incompatible changes. When a supplier wants to add a column or tighten a constraint, a pull request surfaces the diff to all downstream owners for sign-off. This contract-first approach slashes surprise breakages, letting Agentic models retrain on evolving datasets without frantic hot-fixes at midnight.
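
One way to automate that check is to diff a proposed schema against the published contract. The sketch below assumes a simplified contract (column name mapped to a logical type and a nullable flag) and a hypothetical review_change function; real contract specifications also cover latency, SLAs, and deprecation dates.

```python
# Hypothetical contract shape: column name -> (logical type, nullable?)
Contract = dict[str, tuple[str, bool]]

def review_change(current: Contract, proposed: Contract) -> dict[str, list[str]]:
    """Classify a proposed schema against the published contract.

    'reject'   : changes that silently break existing consumers.
    'sign_off' : compatible changes that downstream owners should still approve.
    """
    reject, sign_off = [], []
    for col, (col_type, nullable) in current.items():
        if col not in proposed:
            reject.append(f"removed column {col}")
            continue
        new_type, new_nullable = proposed[col]
        if new_type != col_type:
            reject.append(f"type change on {col}: {col_type} -> {new_type}")
        elif new_nullable and not nullable:
            reject.append(f"{col} may now contain nulls")   # consumers assumed NOT NULL
        elif nullable and not new_nullable:
            sign_off.append(f"{col} tightened to NOT NULL")  # producers must now comply
    for col in sorted(proposed.keys() - current.keys()):
        sign_off.append(f"new column {col}")
    return {"reject": reject, "sign_off": sign_off}

current = {"order_id": ("string", False), "amount": ("decimal", False), "coupon": ("string", True)}
proposed = {"order_id": ("string", False), "amount": ("double", False),
            "coupon": ("string", False), "channel": ("string", True)}
print(review_change(current, proposed))
```

Here the type change on amount is rejected outright, while tightening coupon and adding channel are routed to downstream owners for sign-off, mirroring the pull-request flow described above.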

Policy Automation Scales Governance

Manual approvals don’t work when hundreds of mesh domains deploy code daily. Policy-as-Code engines watch git events, CI results, and metadata changes, auto-tagging sensitive columns, enforcing encryption, and verifying that data leaves protected zones only via approved APIs. If a team tries to publish PII to a public bucket, the pipeline fails before data leaves the firewall. Such automation ensures regulatory compliance even as innovation velocity explodes, giving risk teams confidence to bless rapid experimentation.
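
A policy gate of this kind can be sketched in a few lines. The regex-based tagger, the check_publish function, and the destination dictionary with a public flag are all hypothetical; pattern matching on sampled values is a simplification of the classifiers real engines use.

```python
import re

# Hypothetical detection rules; production engines such as Open Policy Agent
# express policies in their own language (Rego) rather than in Python.
PII_PATTERNS = {
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[a-z]{2,}", re.IGNORECASE),
    "phone": re.compile(r"\+?\d[\d\s-]{8,}\d"),
}

def tag_pii_columns(sample_rows):
    """Auto-tag columns whose sampled string values fully match a PII pattern."""
    tags = {}
    for row in sample_rows:
        for col, value in row.items():
            if not isinstance(value, str):
                continue
            for label, pattern in PII_PATTERNS.items():
                if pattern.fullmatch(value):
                    tags.setdefault(col, set()).add(label)
    return tags

def check_publish(sample_rows, destination):
    """Fail the pipeline step if PII-tagged columns would be written to a public location."""
    tags = tag_pii_columns(sample_rows)
    if tags and destination.get("public", False):
        raise PermissionError(f"PII columns {sorted(tags)} blocked from {destination['uri']}")
    return tags

rows = [{"order_id": "A-1001", "contact": "ana@example.com",
         "phone": "+44 20 7946 0958", "amount": 42.0}]
print(check_publish(rows, {"uri": "s3://internal-zone/orders", "public": False}))
try:
    check_publish(rows, {"uri": "s3://public-bucket/orders", "public": True})
except PermissionError as err:
    print("blocked:", err)
```

Because the check raises inside the pipeline, the write never starts, which is the "fail before data leaves the firewall" behavior the paragraph describes.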

Measuring Mesh Value With Product Analytics

Adoption, satisfaction, and ROI dashboards track each data product’s impact: query volumes, derived-model revenue uplift, incident rate, and support tickets. Product managers iterate on documentation, sample notebooks, or caching strategies just as a SaaS team would. By quantifying value at the product level, leadership can retire under-performing feeds, invest in high-impact domains, and prove to the board that the mesh isn’t just architectural hype but a measurable profit engine for Agentic AI.

Vector-Native Lakehouses and the Multimodal Revolution

Embeddings Become a First-Class Data Type

As large language and vision models proliferate, enterprise lake formats will natively store and index high-dimensional vectors the same way they treat integers or strings today. New metadata fields capture source model, training date, and dimensionality, while built-in approximate-nearest-neighbor (ANN) indexes accelerate similarity searches across billions of vectors. By embedding this capability directly in the storage layer, data teams can often avoid operating a separate vector database, and Agentic copilots can retrieve context or prior cases in milliseconds without leaving the lake boundary.
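
To make the idea concrete, the sketch below models a vector column that carries the metadata mentioned above and answers similarity queries. It performs exact cosine search by brute force; the ANN indexes the paragraph refers to approximate this same ranking at scale. The class name and the model identifier are hypothetical.

```python
import math
from dataclasses import dataclass, field

@dataclass
class VectorColumn:
    source_model: str   # hypothetical metadata fields from the text
    trained_on: str
    dim: int
    rows: dict = field(default_factory=dict)   # row id -> unit-normalized vector

    @staticmethod
    def _unit(vector):
        norm = math.sqrt(sum(x * x for x in vector))
        return [x / norm for x in vector]       # assumes a non-zero vector

    def insert(self, row_id, vector):
        if len(vector) != self.dim:
            raise ValueError(f"expected {self.dim} dims, got {len(vector)}")
        self.rows[row_id] = self._unit(vector)

    def nearest(self, query, k=3):
        """Exact cosine top-k; an ANN index trades a little recall for speed on this ranking."""
        q = self._unit(query)
        scored = [(sum(a * b for a, b in zip(q, v)), rid) for rid, v in self.rows.items()]
        return [rid for _, rid in sorted(scored, reverse=True)[:k]]

col = VectorColumn(source_model="text-encoder-v1", trained_on="2024-01", dim=3)
col.insert("case-1", [1.0, 0.0, 0.0])
col.insert("case-2", [0.9, 0.1, 0.0])
col.insert("case-3", [0.0, 1.0, 0.0])
print(col.nearest([1.0, 0.0, 0.0], k=2))   # ['case-1', 'case-2']
```

Storing the dimensionality alongside the vectors lets the column reject embeddings from a mismatched model at write time instead of returning meaningless similarities later.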

Multimodal Tables Collapse Data Silos

Instead of scattering images in object stores, text in tables, and audio in blob fields, upcoming “multimodal tables” will allow mixed columns (binary image blobs, transcribed text, and numeric telemetry) to coexist under a unified schema. Engines like DuckDB or Trino will push down GPU-accelerated codecs to decode media on the fly, letting a single SQL query join visual defects with operator notes and sensor peaks. Agents gain holistic context, merging sight, sound, and stats into richer decisions: think of a maintenance bot cross-referencing vibration spikes with inspection images and recorded bearing noise to predict machinery fatigue.
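
The kind of cross-modal join described above can be illustrated with SQLite standing in for DuckDB or Trino. The schema, table names, and the 300-second matching window are assumptions; the image column holds placeholder bytes, and the media-decoding (GPU) step is not shown.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE inspections    (machine_id TEXT, ts INTEGER, image BLOB, defect_label TEXT);
CREATE TABLE operator_notes (machine_id TEXT, ts INTEGER, note TEXT);
CREATE TABLE telemetry      (machine_id TEXT, ts INTEGER, vibration_mm_s REAL);
""")
conn.execute("INSERT INTO inspections VALUES (?, ?, ?, ?)",
             ("M7", 1000, b"\x89PNG placeholder bytes", "crack"))
conn.execute("INSERT INTO operator_notes VALUES (?, ?, ?)",
             ("M7", 1050, "grinding noise at startup"))
conn.executemany("INSERT INTO telemetry VALUES (?, ?, ?)",
                 [("M7", 900, 4.2), ("M7", 1100, 11.8), ("M7", 2000, 3.0)])

# One query links the image-derived label, free text, and the sensor peak within +/-300 s.
rows = conn.execute("""
SELECT i.machine_id, i.defect_label, n.note, MAX(t.vibration_mm_s) AS peak_vibration
FROM inspections i
JOIN operator_notes n ON n.machine_id = i.machine_id AND ABS(n.ts - i.ts) <= 300
JOIN telemetry t      ON t.machine_id = i.machine_id AND ABS(t.ts - i.ts) <= 300
GROUP BY i.machine_id, i.ts, i.defect_label, n.note
""").fetchall()
print(rows)   # [('M7', 'crack', 'grinding noise at startup', 11.8)]
```

The reading at ts=2000 falls outside the window, so only the two nearby vibration samples compete for the peak.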

On-Lake RAG (Retrieval-Augmented Generation)

RAG pipelines currently juggle multiple services: an LLM API, a vector store, and a caching layer. The next generation will embed prompt templates, embedding functions, and retrieval logic inside the lakehouse engine. A single SQL call, such as a hypothetical SELECT rag(question, docs) FROM knowledge_base, will fetch relevant passages, craft a prompt, and stream a coherent answer back with sub-second latency. Governance tags follow the data, so restricted documents never reach unauthorized prompts, while cached results reduce token spend. The result: enterprise-grade copilots that run entirely on governed infrastructure, free from vendor lock-in.
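
Stripped of the LLM call, the retrieve-then-prompt part of on-lake RAG can be sketched as follows. Keyword overlap stands in for embedding similarity, the sensitivity column stands in for governance tags, and build_rag_prompt is a hypothetical helper rather than an existing lakehouse function; the assembled prompt would then be sent to a model.

```python
import re
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE knowledge_base (doc_id TEXT, body TEXT, sensitivity TEXT)")
conn.executemany("INSERT INTO knowledge_base VALUES (?, ?, ?)", [
    ("kb-1", "Reset the pump controller by holding the red button for ten seconds.", "internal"),
    ("kb-2", "Pump warranty claims require the serial number and purchase date.", "internal"),
    ("kb-3", "Board only: shortlist of acquisition targets for the pump division.", "restricted"),
])

def tokens(text):
    return set(re.findall(r"[a-z]+", text.lower()))

def build_rag_prompt(question, clearance=("internal",), k=2):
    """Retrieve the top-k passages the caller is cleared for and assemble a grounded prompt."""
    placeholders = ", ".join("?" for _ in clearance)
    rows = conn.execute(   # governance filter runs in SQL, before any ranking
        f"SELECT doc_id, body FROM knowledge_base WHERE sensitivity IN ({placeholders})",
        tuple(clearance),
    ).fetchall()
    q = tokens(question)
    ranked = sorted(rows, key=lambda r: len(q & tokens(r[1])), reverse=True)[:k]
    context = "\n".join(f"[{doc_id}] {body}" for doc_id, body in ranked)
    return f"Answer using only the context below.\n{context}\nQuestion: {question}"

print(build_rag_prompt("How do I reset the pump controller?"))
```

Filtering before ranking matters: kb-3 shares words with the question, but a caller without "restricted" clearance never sees it, even as a low-ranked candidate.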

GPU Push-Down Shrinks Latency

Columnar GPU databases are already surfacing, but tomorrow’s lake engines will off-load UDFs (vector math, image augmentations, transformer layers) directly to shared GPU buffers during scan time. A query that once loaded 50 GB into Python now executes adjacent to the data, returning only final logits or attention maps. For real-time Agentic decision loops (autonomous factories, adaptive pricing), this reduction in data movement translates to a tangible competitive edge: decisions made in hundreds of milliseconds rather than seconds.

Edge-Aware Replication Fuels Instant Personalization

Vector-rich user profiles need to live close to the action: retail storefronts, 5G base stations, or in-car entertainment units. Intelligent replication policies identify “hot” vectors—recently updated embeddings or top-N high-value customers—and sync them to edge caches with write-behind consistency. Agents running on ARM chips can then serve hyper-personalized recommendations offline, syncing gradients or interaction logs back to the main lake when connectivity permits. This architecture marries cloud-scale training with on-device responsiveness, delivering next-level customer experiences.
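
A minimal sketch of the replication logic follows, assuming profiles are plain dictionaries with an updated_at timestamp and a customer value score (embedding vectors are omitted for brevity). The thresholds and the EdgeCache class are hypothetical; a production system would also need conflict resolution when the same profile changes at the edge and in the lake.

```python
def pick_hot_keys(profiles, now, recent_s=3600, top_n=2):
    """'Hot' = updated within recent_s seconds, or among the top-N customers by value."""
    recent = {k for k, p in profiles.items() if now - p["updated_at"] <= recent_s}
    top = set(sorted(profiles, key=lambda k: profiles[k]["value"], reverse=True)[:top_n])
    return recent | top

class EdgeCache:
    """Serves reads locally and buffers writes, flushing them to the lake later (write-behind)."""

    def __init__(self, lake):
        self.lake = lake      # stand-in for the central lake: a dict of profiles
        self.local = {}
        self.pending = []

    def sync_from_lake(self, keys):
        for k in keys:
            self.local[k] = dict(self.lake[k])   # copy hot profiles to the edge

    def lookup(self, key):
        return self.local.get(key)               # works offline; None if not replicated

    def record_interaction(self, key, event):
        self.pending.append((key, event))        # no network round trip on the hot path

    def flush(self, connected):
        """Push buffered events to the lake when a link is available; return the count sent."""
        if not connected:
            return 0
        for key, event in self.pending:
            self.lake[key].setdefault("events", []).append(event)
        sent, self.pending = len(self.pending), []
        return sent

profiles = {"c1": {"updated_at": 100, "value": 50}, "c2": {"updated_at": 9000, "value": 5},
            "c3": {"updated_at": 200, "value": 900}, "c4": {"updated_at": 300, "value": 10}}
hot = pick_hot_keys(profiles, now=9500)
cache = EdgeCache(profiles)
cache.sync_from_lake(hot)
cache.record_interaction("c3", "clicked:boots")
print(sorted(hot), cache.flush(connected=False), cache.flush(connected=True))
# ['c1', 'c2', 'c3'] 0 1
```

While offline, the first flush sends nothing and the event stays buffered; once connectivity returns, the second flush delivers it to the lake.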

Synthetic Realities: Simulation and Synthetic-Data Pipelines

Procedural Generators Combat Data Scarcity

Rare-event prediction - equipment failures, fraud spikes, natural disasters - suffers from class imbalance. Procedural generators use adversarial nets or diffusion models trained on real data to spin up synthetic examples that preserve statistical properties while limiting exposure of sensitive details. Each synthetic record carries a “provenance tag” so compliance tools can distinguish it from ground truth. Feeding these balanced datasets into Agentic learners can substantially improve recall on edge cases while safeguarding privacy, easing the tension between the need for abundant training data and privacy regulation.
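
As a lightweight stand-in for the GAN or diffusion generators described above, the sketch below uses a simplified SMOTE-style interpolation to oversample the rare class and stamps every row with a provenance tag. The column names are hypothetical. Interpolated points sit close to real records, so this technique alone does not provide formal privacy guarantees.

```python
import random

def oversample_minority(rows, label_key, minority, target_count, features, seed=0):
    """Simplified SMOTE-style oversampling with provenance tags.

    New points are interpolated between two randomly chosen real minority rows
    (classic SMOTE restricts the partner to one of the k nearest neighbours).
    """
    rng = random.Random(seed)
    tagged = [dict(r, provenance=r.get("provenance", "observed")) for r in rows]
    minority_rows = [r for r in tagged if r[label_key] == minority]
    if len(minority_rows) < 2:
        raise ValueError("need at least two minority examples to interpolate")
    synthetic = []
    while len(minority_rows) + len(synthetic) < target_count:
        a, b = rng.sample(minority_rows, 2)
        t = rng.random()
        new = {f: a[f] + t * (b[f] - a[f]) for f in features}
        new[label_key] = minority
        new["provenance"] = "synthetic:smote-style"   # lets compliance tools filter it
        synthetic.append(new)
    return tagged + synthetic

rows = ([{"temp_c": 60 + i, "vibration": 2.0 + 0.1 * i, "failed": False} for i in range(6)]
        + [{"temp_c": 95.0, "vibration": 9.0, "failed": True},
           {"temp_c": 101.0, "vibration": 11.5, "failed": True}])
balanced = oversample_minority(rows, "failed", True, 6, ["temp_c", "vibration"])
print(sum(r["failed"] for r in balanced))   # 6
```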

Scenario-Based Digital Twins Accelerate Policy Testing

Industrial firms are already building physics-based twins, but next-gen twins will incorporate socio-economic layers: demand shocks, regulatory shifts, human behavior loops. Agents run Monte Carlo batches across thousands of scenarios per hour, gauging not only operational KPIs but also ESG scores and brand sentiment. Simulation outcomes flow back to the lake as “counterfactual logs,” enriching training sets with what-if trajectories. The business gains data-driven foresight - like seeing how a hurricane plus supply chain strike would ripple through margins - without risking real assets.
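
A toy version of such a Monte Carlo batch is sketched below. The shock probabilities, revenue and cost figures, and impact multipliers are invented for illustration; a real digital twin would replace simulate_margin with a physics or agent-based model.

```python
import random

def simulate_margin(rng, base_revenue=100.0, base_cost=80.0, p_hurricane=0.05, p_strike=0.10):
    """One scenario: random shocks hit revenue and cost. Returns (margin, scenario flags)."""
    hurricane = rng.random() < p_hurricane
    strike = rng.random() < p_strike
    revenue = base_revenue * rng.gauss(1.0, 0.05)
    cost = base_cost * rng.gauss(1.0, 0.03)
    if hurricane:
        revenue *= 0.85      # assumed demand loss
    if strike:
        cost *= 1.10         # assumed expediting premium
    return revenue - cost, {"hurricane": hurricane, "strike": strike}

def run_batch(n, seed=42):
    """Run n scenarios and return them as counterfactual log records for the lake."""
    rng = random.Random(seed)
    logs = []
    for i in range(n):
        margin, flags = simulate_margin(rng)
        logs.append({"scenario_id": i, "kind": "counterfactual", "margin": margin, **flags})
    return logs

def mean(xs):
    return sum(xs) / len(xs)

logs = run_batch(20_000)
calm = [r["margin"] for r in logs if not (r["hurricane"] or r["strike"])]
both = [r["margin"] for r in logs if r["hurricane"] and r["strike"]]
print(f"calm: {mean(calm):.1f}, hurricane + strike: {mean(both):.1f} (n={len(both)})")
```

Tagging each record with its scenario flags is what lets the compound case (hurricane plus strike) be sliced out of the counterfactual logs afterwards.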

Privacy-Preserving Synthetic Publishing

Healthcare and finance often block data sharing despite massive research interest. Differentially private generative models will allow organizations to publish shareable “synthetic twins” that are statistically faithful yet carry a mathematically bounded re-identification risk. Researchers can validate hypotheses, and Agentic startups can pre-train models, all while the original PII stays sealed. This practice fuels open innovation, expands data marketplaces, and positions compliant organizations as ecosystem leaders rather than data hoarders.
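
One simple differentially private release, swapped in here for a full generative model, is to add Laplace noise to a histogram and sample synthetic records from the noisy counts. The sketch assumes each person contributes exactly one value and that the category domain is fixed in advance rather than read from the data; dp_synthetic is a hypothetical helper.

```python
import random
from collections import Counter

def laplace(rng, scale):
    """Laplace(0, scale) noise as the difference of two i.i.d. exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def dp_synthetic(values, domain, epsilon, n_out, seed=0):
    """Noisy histogram (Laplace mechanism) followed by sampling synthetic records.

    Assumes one value per person, so adding or removing a person changes one
    count by 1 (L1 sensitivity 1) and noise scale 1/epsilon gives epsilon-DP.
    Sampling from the noisy counts is post-processing and costs no extra budget.
    The seed is only for reproducibility; real releases need a secure noise source.
    """
    rng = random.Random(seed)
    counts = Counter(values)
    noisy = [max(0.0, counts[v] + laplace(rng, 1 / epsilon)) for v in domain]
    if sum(noisy) == 0:
        raise ValueError("all noisy counts clipped to zero; raise epsilon or coarsen the domain")
    return rng.choices(domain, weights=noisy, k=n_out)

domain = ["18-29", "30-44", "45-64", "65+"]          # fixed in advance, not read from data
values = ["18-29"] * 300 + ["30-44"] * 500 + ["45-64"] * 150 + ["65+"] * 50
synthetic = dp_synthetic(values, domain, epsilon=1.0, n_out=1000)
print(Counter(synthetic).most_common())
```

The published records preserve the overall age mix, while any individual's presence shifts the released distribution only within the epsilon bound.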

Feedback-Loop Synthesis for Continual Learning

Simulation isn’t one-and-done. Agents will soon request targeted synthetic data when uncertainty spikes in particular state spaces - “generate more night-time pedestrian scenes in rain.” A synthesis service produces new samples, the lake stores them with proper lineage, and the model retrains, reducing epistemic uncertainty. This closed loop creates a self-improving curriculum where synthetic and real data co-evolve, propelling Agentic systems toward ever-broader competence without linear data-collection costs.
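
The trigger side of this loop can be sketched by grouping predictions by state slice and flagging slices with high average predictive entropy. The slice keys, threshold, batch size, and request format are assumptions. Predictive entropy mixes epistemic and aleatoric uncertainty, so ensembles or Monte Carlo dropout would be needed to isolate the epistemic part.

```python
import math

def entropy(probs):
    """Shannon entropy (nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def synthesis_requests(predictions, threshold=0.5, min_count=2, batch_size=500):
    """Request synthetic data for state slices whose mean predictive entropy is high.

    `predictions` is a list of (slice_key, class_probabilities) pairs.
    """
    by_slice = {}
    for key, probs in predictions:
        by_slice.setdefault(key, []).append(entropy(probs))
    requests = []
    for key, ents in sorted(by_slice.items()):
        mean_h = sum(ents) / len(ents)
        if len(ents) >= min_count and mean_h > threshold:
            requests.append({"slice": key, "mean_entropy": round(mean_h, 3),
                             "n_requested": batch_size})
    return requests

preds = [(("night", "rain"), [0.5, 0.5]), (("night", "rain"), [0.6, 0.4]),
         (("day", "clear"), [0.98, 0.02]), (("day", "clear"), [0.97, 0.03])]
print(synthesis_requests(preds))
```

Only the night-and-rain slice crosses the threshold, so the synthesis service is asked for more of exactly those scenes.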

Regulatory Sandboxes Become Standard Practice

Governments increasingly require proof that high-stakes AI behaves safely before public rollout. Regulatory sandboxes - secure cloud enclaves pre-approved by watchdogs - host synthetic datasets and simulated environments representative of real populations. Companies submit agents for stress testing under adversarial conditions. Test results, lineage, and mitigation steps are logged back to the lake, providing auditable compliance artifacts. Passing the sandbox fast-tracks market entry, turning regulatory compliance from a blocker into a structured, data-rich milestone.

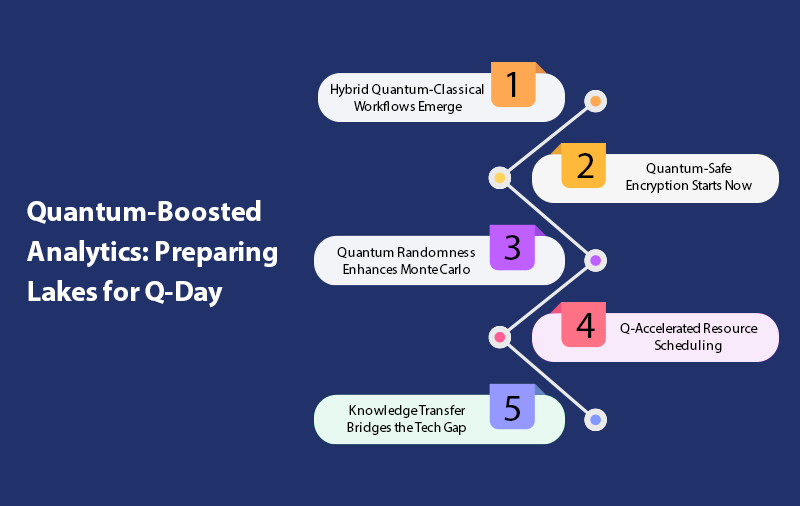

Quantum-Boosted Analytics: Preparing Lakes for Q-Day

Quantum processing units (QPUs) won’t replace CPUs overnight, but hybrid orchestration layers will route sub-tasks like combinatorial optimization and large-scale sampling to QPUs while leaving ETL and visualization on classical clusters. A single DAG node might extract features in Spark, encode them into qubit registers, run a variational algorithm, and write the measured outcome frequencies back as probability distributions. Early adopters - portfolio optimizers, route planners - report promising results on limited qubit counts, hinting at larger gains when hardware matures.
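
The shape of such a hybrid DAG can be sketched classically. Below, the quantum node is simulated: a single parameterized RY rotation measured over many shots, rather than a full variational algorithm, and the risk-to-angle feature mapping is an assumption. It shows the key interface point: a QPU returns measurement counts, which the pipeline writes back as an estimated probability.

```python
import math
import random

def extract_features(records):
    """Classical step (Spark in the text): map a 0-1 risk score to a rotation angle."""
    return [min(max(r["risk"], 0.0), 1.0) * math.pi for r in records]

def simulated_qpu(theta, shots, rng):
    """Classical stand-in for measuring RY(theta)|0> in the computational basis.

    P(outcome 1) = sin^2(theta / 2). Like real hardware, it returns counts, not amplitudes.
    """
    p1 = math.sin(theta / 2) ** 2
    ones = sum(rng.random() < p1 for _ in range(shots))
    return {"0": shots - ones, "1": ones}

def run_dag(records, shots=2000, seed=7):
    """Feature node -> quantum node -> write-back node, as one linear pipeline."""
    rng = random.Random(seed)
    results = []
    for rec, theta in zip(records, extract_features(records)):
        counts = simulated_qpu(theta, shots, rng)
        results.append({"id": rec["id"], "p1": counts["1"] / shots})  # estimated distribution
    return results

print(run_dag([{"id": "a", "risk": 0.0}, {"id": "b", "risk": 0.5}, {"id": "c", "risk": 1.0}]))
```

Swapping simulated_qpu for a call to a real quantum service would leave the feature and write-back nodes unchanged, which is the main appeal of the hybrid-orchestration pattern.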

Quantum-Safe Encryption Starts Now

The flip side of quantum power is cryptographic threat. Lakes storing decades of sensitive data must begin migrating to post-quantum cryptography, such as lattice-based key encapsulation (ML-KEM) and lattice- or hash-based signatures (ML-DSA, SLH-DSA), well before Q-Day, because adversaries can harvest encrypted data today and decrypt it later. Table formats will embed “encryption version” metadata, enabling gradual re-encryption without downtime. Agents query data through “crypto gateways” that auto-negotiate keys, shielding business secrets from future decryption breakthroughs while maintaining backward compatibility with legacy apps.
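
Gradual re-encryption can be planned from that metadata. The sketch assumes a hypothetical per-file enc_version field and a size budget per batch; the actual key wrapping or re-encryption step is deliberately left out.

```python
from dataclasses import dataclass

@dataclass
class DataFile:
    path: str
    enc_version: int   # hypothetical table-format metadata field
    size_gb: float

def plan_reencryption(files, target_version, batch_budget_gb):
    """Yield size-bounded batches of files still below target_version, oldest scheme first,
    so re-encryption can proceed incrementally while the table stays online."""
    pending = sorted((f for f in files if f.enc_version < target_version),
                     key=lambda f: f.enc_version)
    batch, used = [], 0.0
    for f in pending:
        if batch and used + f.size_gb > batch_budget_gb:
            yield batch
            batch, used = [], 0.0
        batch.append(f)
        used += f.size_gb
    if batch:
        yield batch

files = [DataFile("part-0", 1, 40), DataFile("part-1", 2, 30),
         DataFile("part-2", 3, 50), DataFile("part-3", 1, 20)]
for batch in plan_reencryption(files, target_version=3, batch_budget_gb=60):
    print([f.path for f in batch])
```

Files already at the target version are skipped, and readers can keep using the table because each batch touches only a bounded slice of it.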

Quantum Randomness Enhances Monte Carlo

True quantum randomness offers certifiably unpredictable entropy, which is valuable for auditable simulations. Upcoming lake connectors will stream entropy from cloud QPUs into Spark or Pandas jobs, feeding financial VaR simulations, genetic algorithms, or climate models. Needing fewer simulation runs for equivalent confidence, however, depends mainly on variance-reduction techniques such as importance sampling or low-discrepancy (quasi-random) sequences rather than on the entropy source itself; combining the two trims compute costs and lets Agentic strategies converge faster on robust policies.
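
A minimal Monte Carlo VaR sketch with a pluggable random source is shown below. Any object exposing .gauss(mu, sigma) can be passed in, so a wrapper around a quantum entropy stream (hypothetical here) could replace random.Random. The precision of the estimate is driven by the number of draws n, roughly as 1/√n. The normal-returns model and portfolio figures are illustrative.

```python
import random
from statistics import NormalDist

def mc_value_at_risk(rng, n=100_000, mu=0.0, sigma=0.02, value=1_000_000, level=0.99):
    """One-day VaR from simulated normal returns; `rng` is any object with .gauss(mu, sigma)."""
    losses = sorted(-value * rng.gauss(mu, sigma) for _ in range(n))
    return losses[int(level * n)]   # empirical `level` quantile of the loss distribution

estimate = mc_value_at_risk(random.Random(2024))
analytic = -NormalDist(0.0, 0.02).inv_cdf(0.01) * 1_000_000   # closed form for this toy model
print(f"Monte Carlo: {estimate:,.0f}  analytic: {analytic:,.0f}")
```

Because the toy model has a closed-form answer, it doubles as a check: whichever entropy source is plugged in, the estimate should land close to the analytic value for a given n.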

Q-Accelerated Resource Scheduling

Problems like global logistics routing or power-grid dispatch have exponentially large solution spaces. The quantum approximate optimization algorithm (QAOA) promises near-optimal answers, although whether it outpaces strong classical heuristics at scale is still an open research question. Lakes will house historical demand and constraint matrices; orchestration engines ship them to QPUs nightly, returning schedules agents can tweak in real time. Early field tests reportedly show 5–10% efficiency gains - worth billions at Fortune 500 scale - suggesting quantum is more than an academic curiosity.
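
QAOA and quantum annealers commonly take problems expressed as a QUBO (quadratic unconstrained binary optimization), or its equivalent Ising form. The sketch below encodes a toy "open exactly k sites at minimum cost" problem as a QUBO and solves it by exhaustive search, the classical baseline a quantum run would be compared against. Costs and the penalty weight are made up.

```python
from itertools import product

def build_qubo(costs, k, penalty):
    """QUBO for 'open exactly k sites at minimum total cost'.

    Objective: sum(c_i x_i) + penalty * (sum(x_i) - k)^2 with binary x_i.
    Using x_i^2 = x_i, the diagonal is c_i + penalty*(1 - 2k), each pair i<j
    gets 2*penalty, and the constant penalty*k^2 is dropped.
    """
    n = len(costs)
    Q = [[0.0] * n for _ in range(n)]
    for i in range(n):
        Q[i][i] = costs[i] + penalty * (1 - 2 * k)
        for j in range(i + 1, n):
            Q[i][j] = 2.0 * penalty
    return Q

def energy(Q, x):
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(i, n))

def brute_force(Q):
    """Exhaustive classical baseline; a QAOA run would instead sample low-energy bitstrings."""
    return min(product((0, 1), repeat=len(Q)), key=lambda x: energy(Q, x))

costs, k, penalty = [4.0, 1.0, 3.0, 2.0], 2, 10.0
Q = build_qubo(costs, k, penalty)
best = brute_force(Q)
print(best, energy(Q, best) + penalty * k * k)   # (0, 1, 0, 1) 3.0
```

Adding back the dropped constant recovers the true cost of the chosen sites (1 + 2). Exhaustive search doubles in cost with every extra binary variable, which is why heuristics, classical or quantum, take over at realistic sizes.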

Knowledge Transfer Bridges the Tech Gap

Quantum expertise is scarce. “Teacher–student” frameworks will distill insights from quantum solvers into compact classical neural nets that run cheaply at inference time. The lake stores paired datasets - problem instance, quantum optimal solution - allowing supervised learning systems to mimic QPU outputs for everyday use. This approach democratizes quantum benefits, ensuring value even for organizations that can’t afford dedicated hardware 24/7.

Beyond Compliance: Societal Stewardship in an Autonomous Age

AI-Native Legislation Moves From Paper to Code

Policymakers are shifting from static PDFs toward machine-readable regulation; imagine obligations from the EU AI Act or India’s DPDP Act published as JSON schemas or YAML manifests. Lakehouse policy engines will ingest these schemas directly, generating enforcement rules that run as part of each query plan. Companies that wire legislation into code workflows could cut audit prep from months to minutes, freeing teams to innovate rather than wrestle with binders of legal prose.
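
A minimal sketch of turning a machine-readable obligation into query-time enforcement follows. The rule format, its field names, and compile_rule are hypothetical; no regulator currently publishes rules in this shape.

```python
# Hypothetical machine-readable obligation; no regulator publishes this exact format.
RULE = {
    "id": "contact-data-purpose-limit",
    "applies_to_columns": ["email", "phone"],
    "allowed_purposes": ["service", "fraud_prevention"],
    "action_otherwise": "mask",
}

def compile_rule(rule):
    """Turn a declarative rule into a filter the query layer applies to result rows."""
    if rule["action_otherwise"] != "mask":
        raise NotImplementedError("this sketch only supports masking")
    cols = set(rule["applies_to_columns"])
    allowed = set(rule["allowed_purposes"])

    def enforce(rows, purpose):
        if purpose in allowed:
            return rows
        return [{c: ("***" if c in cols else v) for c, v in row.items()} for row in rows]

    return enforce

enforce = compile_rule(RULE)
rows = [{"customer_id": 7, "email": "ana@example.com", "segment": "gold"}]
print(enforce(rows, purpose="marketing"))   # [{'customer_id': 7, 'email': '***', 'segment': 'gold'}]
print(enforce(rows, purpose="service"))     # unchanged
```

Compiling once and applying per query keeps the legal logic in a single declarative artifact that auditors can diff, rather than scattered across pipeline code.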

Collective-Intelligence Coalitions Tackle Grand Challenges

No single enterprise can solve climate risk or pandemic forecasting alone. Federated-learning coalitions of banks, hospitals, and utilities train joint models across private lakes without sharing raw data. Secure multi-party computation aggregates gradients so that no participant sees another’s individual updates, delivering global insights while preserving sovereignty. These cross-industry agents identify systemic risks (water scarcity, supply-chain fragility) early, enabling coordinated action that transcends corporate boundaries and single-company profit motives.
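
The gradient-reconciliation step can be illustrated with a simplified secure-aggregation scheme based on pairwise additive masks, swapped in here for general multi-party computation. Each institution's masked vector looks random on its own, yet the masks cancel when all vectors are summed. The fixed-point scale and shared seed are assumptions; production protocols also handle key agreement and participants who drop out mid-round.

```python
import random

MOD = 2**32
SCALE = 10**6   # fixed-point scaling so masks cancel exactly in integer arithmetic

def encode(x):
    return round(x * SCALE) % MOD

def decode(v):
    v %= MOD
    if v >= MOD // 2:          # map back to the signed range
        v -= MOD
    return v / SCALE

def masked_updates(gradients, seed=0):
    """Each pair (i, j) shares a random mask: i adds it, j subtracts it.

    A shared seed stands in for pairwise key agreement between participants.
    """
    rng = random.Random(seed)
    n, dim = len(gradients), len(gradients[0])
    masked = [[encode(g) for g in grad] for grad in gradients]
    for i in range(n):
        for j in range(i + 1, n):
            for d in range(dim):
                m = rng.randrange(MOD)
                masked[i][d] = (masked[i][d] + m) % MOD
                masked[j][d] = (masked[j][d] - m) % MOD
    return masked

def aggregate(masked):
    """Sum the masked vectors (masks cancel) and return the average gradient."""
    n = len(masked)
    return [decode(sum(col) % MOD) / n for col in zip(*masked)]

grads = [[0.2, -1.5], [0.4, 0.5], [-0.3, 2.0]]   # one gradient vector per institution
masked = masked_updates(grads)
print(aggregate(masked))   # approximately [0.1, 0.3333]
```

The aggregator only ever handles the masked vectors, yet recovers the same average plain federated averaging would produce.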

Tokenized Data Marketplaces Reward Quality

To motivate data sharing, decentralized marketplaces will issue crypto tokens or micro-royalties whenever a dataset powers profitable agent decisions. Smart contracts trace lineage automatically, distributing rewards to original contributors - be it a supplier’s IoT feed or a citizen-science weather log. Transparent incentives shift data economics from extraction to partnership, enriching lakes with diverse, high-fidelity signals that continuously sharpen Agentic models.

Human-Agent Decision Rooms Normalize Collaboration

Boardrooms will evolve into “decision rooms” where human experts and AI agents debate options in conversational interfaces. The lake streams live metrics, while agents propose strategies and simulate outcomes. Humans probe assumptions, adjust risk tolerances, or inject ethical constraints, and the agent recalculates on the spot. This iterative dialogue raises decision quality by combining machine breadth with human judgment, creating a governance model that values accountability over blind automation.

Green AI and Carbon-Aware Scheduling

Training giant models can emit tons of CO₂. Carbon-aware schedulers will shift non-urgent compute to data centers powered by renewables or to time slots when grid emissions are low. Lakehouse metadata will tag each job with estimated energy cost; dashboards translate that into carbon and dollar terms. Decision-makers trade off accuracy gains against environmental impact in clear metrics. Such transparency pressures vendors to optimize silicon efficiency and aligns AI innovation with corporate net-zero pledges.
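
Carbon-aware scheduling largely reduces to choosing the start time with the lowest forecast grid intensity before a deadline. In the sketch below, the hourly forecast, the 40 kW draw, and the electricity price are illustrative values, and best_start and job_footprint are hypothetical helpers.

```python
def best_start(forecast, duration_h, deadline_h):
    """Start hour minimizing total grid carbon intensity over the run, finishing by deadline_h.

    forecast[h] is the predicted grid intensity (gCO2/kWh) for hour h; assumes
    deadline_h <= len(forecast). Raises ValueError if the job cannot fit.
    """
    return min(range(deadline_h - duration_h + 1),
               key=lambda s: sum(forecast[s:s + duration_h]))

def job_footprint(kw, forecast, start, duration_h, usd_per_kwh):
    """Energy, emissions and cost for a job drawing a constant `kw` for `duration_h` hours."""
    kwh = kw * duration_h
    kg_co2 = sum(kw * g for g in forecast[start:start + duration_h]) / 1000   # g -> kg
    return {"kwh": kwh, "kg_co2": kg_co2, "usd": kwh * usd_per_kwh}

forecast = [450, 420, 300, 180, 150, 200, 380, 500]   # illustrative, not real grid data
start = best_start(forecast, duration_h=3, deadline_h=8)
print(start, job_footprint(40, forecast, start, 3, usd_per_kwh=0.12))
print(0, job_footprint(40, forecast, 0, 3, usd_per_kwh=0.12))
```

With a flat price the dollar cost is identical in both runs while emissions drop by more than half, the kind of side-by-side trade-off the dashboards are meant to surface.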

Espire’s Roadmap to Autonomous Advantage

Strategic Lakehouse Blueprinting

Espire begins every engagement with discovery workshops that map business imperatives to data-and-AI capabilities. Our architects translate those insights into a domain-driven lakehouse blueprint—detailing storage tiers, mesh boundaries, metadata fabric, and ingestion topologies—so clients know exactly where and why data should flow before a single cluster spins up. By quantifying latency, quality, and compliance targets in concrete design documents, we eliminate costly rework down the line and give stakeholders a clear ROI narrative that aligns architecture with board-level objectives.

Accelerated AI Factory

Once the foundation is set, our “AI Factory” jump-starts value creation with pre-built MLOps scaffolding: feature stores, vector-native indexes, automated drift sentinels, and CI/CD templates. These modules plug into any cloud or hybrid environment, cutting time-to-model from months to weeks. Espire’s industry use-case accelerators - fraud detection, predictive maintenance, hyper-personalized marketing - let teams deploy vetted reference agents while still customizing features to local data nuances. The result is a repeatable pipeline that turns ideas into production-grade Agentic models at startup speed.

Governance & Trust by Design

Espire bakes governance into every layer, embedding Policy-as-Code rules, lineage capture, and bias audits directly into pipeline templates. Dynamic masking, tokenization, and consent-ledger hooks ensure that even the most advanced retrieval-augmented or reinforcement-learning agents stay within regulatory lines. Our compliance accelerators align with GDPR, DPDP, HIPAA, and forthcoming AI-Safety acts, giving executives the audit trails and explainability artifacts regulators demand - without throttling innovation. Trust moves from paperwork to runtime reality, letting business leaders sleep at night.

FinOps & Sustainability Optimization

We pair technical dashboards with financial telemetry, tagging every GPU-hour and terabyte-month back to a cost center and even a specific model experiment. Auto-scaling policies, spot-instance orchestration, smart tiering, and carbon-aware schedulers keep both budgets and emissions in check. Quarterly “optimization sprints” benchmark spend against industry peers, identifying rightsizing opportunities that typically unlock 25–40% savings - funds clients can reinvest in new AI initiatives. Sustainability metrics appear alongside profit metrics, ensuring ESG goals rise in tandem with revenue.

Continuous Innovation Partnerships

Espire doesn’t disappear after go-live. Our Innovation Lab runs quarterly horizon scans—quantum advances, vector-native breakthroughs, mesh tooling—and prototypes the most promising tech on anonymized client data. Joint war-games test synthetic-data pipelines or digital twins before they’re productionized, while training programs upskill internal teams on the latest responsible-AI patterns. This rolling partnership model keeps clients ahead of the curve, turning Espire into a long-term force multiplier rather than a one-off project vendor.

Conclusion

From domain-driven lake meshes and vector-native storage to quantum-boosted analytics and ethical marketplaces, the next decade will redefine what data lakes and Agentic AI can achieve together. Espire stands ready to guide enterprises through every step - design, build, govern, optimize, and innovate - so they don’t just keep pace with change; they lead it. Ready to future-proof your data strategy and unleash autonomous intelligence at scale? Connect with Espire today and let’s co-create your roadmap to autonomous advantage.