Data Lakes × Agentic AI: Building the Autonomous Enterprise of Tomorrow | Part 3

In Part 2 we laid out the architectural blueprint - storage fabrics, metadata fabric, compute orchestration, and real-time ingestion - that turns a raw data lake into a high-performance lakehouse. But a brilliantly designed system is only half the equation; long-term success hinges on how you run it day after day. Part 3 zooms in on the operational disciplines - MLOps, cost governance, responsible-AI guardrails, and continuous-learning loops - that keep Agentic AI accurate, reliable, and business-aligned once the code leaves the whiteboard.

From Commit to Cluster: MLOps That Keep Agents Evolving

GitOps for Models and Pipelines

Treat your entire ML stack - data-validation scripts, feature-engineering notebooks, training code, infrastructure-as-code - as a single, version-controlled repository. Every change starts with a pull request: developers submit code, bots run unit and integration tests, and reviewers sign off just as they would for a microservice.

When the merge button is clicked, a CI server spins up an isolated staging cluster, executes the full training workflow against a representative data slice, and publishes artifacts to a sandbox registry. The same pipeline then promotes the Docker image or conda package to production once all gates pass, guaranteeing the model you trained is exactly the one you serve - no “it worked on my laptop” surprises.

Automated Drift Sentinels

After deployment, models face a moving target: customer behavior, market trends, or sensor calibrations can shift subtly until yesterday’s features no longer describe today’s reality. Drift sentinels run as streaming jobs alongside inference. They compare real-time feature distributions with the statistical fingerprints logged during training - means, variances, categorical frequencies, even embedding-space centroids.

If the Jensen-Shannon divergence or population-stability index crosses a preset threshold, the sentinel fires a PagerDuty alert, pauses the model (if policy allows), and triggers an automated retraining job using the freshest data. Instead of discovering degradation in next quarter’s KPI review, you detect it while the error budget is still tiny.
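
As a rough illustration of the comparison a drift sentinel performs, the sketch below computes the population-stability index and the Jensen-Shannon divergence between a training histogram and a live histogram using only the standard library. The function names, the bin edges, and the 0.2 PSI threshold (a common rule of thumb) are assumptions for illustration, not values taken from this article.

```python
import math
from typing import Sequence


def histogram(values: Sequence[float], edges: Sequence[float]) -> list:
    """Return the fraction of values in each bin; out-of-range values are clamped."""
    if not values:
        raise ValueError("cannot build a histogram from an empty sample")
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = 0
        for i in range(len(edges) - 1):
            if v >= edges[i]:
                idx = i
        counts[idx] += 1
    return [c / len(values) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population-stability index between two binned distributions."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) on empty bins
        total += (a - e) * math.log(a / e)
    return total


def js_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """Jensen-Shannon divergence with base-2 logs, so the result lies in [0, 1]."""
    def kl(a: Sequence[float], b: Sequence[float]) -> float:
        return sum(x * math.log2(x / y) for x, y in zip(a, b) if x > 0)

    m = [(x + y) / 2 for x, y in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def drifted(train_values, live_values, edges, psi_threshold: float = 0.2) -> bool:
    """Hypothetical sentinel check: True when PSI exceeds the assumed threshold."""
    return psi(histogram(train_values, edges), histogram(live_values, edges)) > psi_threshold
```

In a real sentinel the training histogram would be logged once at training time and the live histogram recomputed over a sliding window; the alerting and retraining hooks are left out here.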

Registries, Artifacts, and Human Checkpoints

A centralized model registry is your system of record: each entry bundles the model binary, the exact Git commit, hyper-parameters, data snapshot pointer, evaluation metrics, and intended business objective. Promotion rules are codified as “human checkpoints.” For a fraud model, that might mean sign-off from an ML engineer, a risk analyst, and a compliance officer.

The registry blocks production release until every checkbox is green, giving autonomy a layer of deliberate human oversight without derailing velocity. Audit logs capture who approved what and when, arming you with traceability for regulators or post-incident forensics.
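
A minimal sketch of such a promotion gate, assuming a hypothetical in-memory `RegistryEntry` and three required roles that mirror the fraud-model example above. A real registry would persist entries and authenticate approvers; none of the names below come from a specific product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Assumed roles, mirroring the fraud-model example in the text.
REQUIRED_ROLES = {"ml_engineer", "risk_analyst", "compliance_officer"}


@dataclass
class RegistryEntry:
    model_name: str
    git_commit: str
    data_snapshot: str
    metrics: dict = field(default_factory=dict)
    approvals: dict = field(default_factory=dict)   # role -> approver
    audit_log: list = field(default_factory=list)   # (timestamp, role, approver)

    def approve(self, role: str, approver: str) -> None:
        """Record a sign-off and append it to the audit trail."""
        if role not in REQUIRED_ROLES:
            raise ValueError(f"unknown role: {role}")
        self.approvals[role] = approver
        stamp = datetime.now(timezone.utc).isoformat()
        self.audit_log.append((stamp, role, approver))

    def missing_roles(self) -> set:
        return REQUIRED_ROLES - set(self.approvals)

    def can_promote(self) -> bool:
        """Production release stays blocked until every required role has signed off."""
        return not self.missing_roles()
```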

Shadow, Canary, Rollback

Risk mitigation doesn’t stop at code review. In shadow mode, a new agent processes mirrored production traffic but its predictions are logged, not acted upon. Performance dashboards compare the shadow’s outputs to the live model in real time, surfacing unexpected edge cases. If metrics look solid, canary routing sends 1 % of real user calls to the new model while 99 % still use the incumbent.

Any dip in key KPIs - latency, accuracy, conversion - automatically flips traffic back. Because models are containerized and stateless, a rollback is as simple as pointing the Kubernetes deployment back at the previous image tag, turning what once required an emergency war room into a two-minute, no-drama operation.
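
The canary-then-rollback logic can be sketched as a small router. This is an illustrative, in-process stand-in for what a service mesh or ingress controller would normally do; the class name, the 5 % error ceiling, and the minimum sample count are assumptions.

```python
import random
from typing import Optional


class CanaryRouter:
    """Sends a small fraction of calls to the canary and rolls back on a KPI dip."""

    def __init__(self, canary_fraction: float = 0.01, max_error_rate: float = 0.05,
                 min_samples: int = 100, rng: Optional[random.Random] = None) -> None:
        self.canary_fraction = canary_fraction
        self.max_error_rate = max_error_rate
        self.min_samples = min_samples
        self.rng = rng or random.Random()
        self.canary_calls = 0
        self.canary_errors = 0
        self.rolled_back = False

    def route(self) -> str:
        """Pick which model serves the next request."""
        if self.rolled_back:
            return "incumbent"
        return "canary" if self.rng.random() < self.canary_fraction else "incumbent"

    def record_canary_result(self, ok: bool) -> None:
        """Track canary outcomes; flip all traffic back once the error ceiling is crossed."""
        self.canary_calls += 1
        if not ok:
            self.canary_errors += 1
        error_rate = self.canary_errors / self.canary_calls
        if self.canary_calls >= self.min_samples and error_rate > self.max_error_rate:
            self.rolled_back = True
```

Waiting for `min_samples` before judging the canary avoids rolling back on a single unlucky request; the same pattern extends to latency or conversion by recording those metrics instead of a boolean.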

One Pane of Glass Observability

Logs, metrics, and traces scattered across tools make triage a nightmare. By instrumenting every service with OpenTelemetry, scraping cluster metrics with Prometheus, and forwarding structured logs to Loki or Elasticsearch, you pipe everything into a single Grafana dashboard. There, you can filter by model version, dataset partition, or Kubernetes pod and immediately see GPU utilization, feature-serving latency, and error counts on the same screen.

Correlate a sudden latency spike to a specific feature pipeline stall in three clicks - no more swivel-chair debugging. With alert rules tied to business SLAs, the system notifies you long before users or executives notice something’s off.

Trust Contracts: Data SLAs Your Agents Can Count On

Uptime Promises for Critical Feeds

For an autonomous system, a missing data stream is the digital equivalent of flying blind. Defining an explicit uptime commitment - say 99.9 % for clickstream, payments, or sensor topics - creates a shared, measurable expectation between producer and consumer teams. Real-time monitoring tracks lag, broker health, and partition leader elections, surfacing any breach within seconds. Automated alerts escalate through Slack, SMS, and voice so someone acts before error budgets are exhausted. Over time, these promises build organizational muscle memory: upstream owners engineer redundancy, downstream agents trust their inputs, and business stakeholders experience fewer nasty surprises during peak events.

Latency & Freshness KPIs

Not every workflow needs sub-second updates, but each requires a clear boundary. Fraud detectors might require an end-to-end latency ceiling of 60 seconds, while weekly demand forecasts can tolerate a 15-minute lag. By codifying latency and freshness in service-level objectives, teams avoid the trap of “real-time everything,” saving money on overbuilt pipelines that deliver negligible incremental value. Dashboards display current versus target freshness for every dataset, and alerting policies trigger only when the gap threatens to impact model accuracy or regulatory compliance. This disciplined approach aligns engineering effort with business impact and keeps infrastructure costs sensible.
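
One way to codify a freshness target is as a small declarative object that a monitor evaluates against the timestamp of the latest event. The sketch below assumes hypothetical names (`FreshnessSLO`, `freshness_status`) and uses the 60-second fraud example from the paragraph above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class FreshnessSLO:
    dataset: str
    max_lag: timedelta  # the agreed freshness ceiling for this dataset


def freshness_status(slo: FreshnessSLO, last_event_time: datetime, now: datetime) -> dict:
    """Compare the observed lag with the target and report whether it is breached."""
    lag = now - last_event_time
    return {
        "dataset": slo.dataset,
        "lag_seconds": lag.total_seconds(),
        "target_seconds": slo.max_lag.total_seconds(),
        "breached": lag > slo.max_lag,
    }
```

Passing `now` in explicitly keeps the check deterministic and easy to test; a dashboard would call it with the current time for every registered dataset.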

Quality Gates and Error Budgets

A pipeline that ships malformed or duplicate records can silently degrade model performance long before anyone notices. Quality gates act as tripwires: they check null ratios, data-type conformity, referential integrity, and domain-specific rules at each hop. If a violation pushes cumulative error beyond a preset budget - say, 0.5 % duplicates per day - the system halts downstream jobs and opens an incident. This fail-fast philosophy protects the integrity of feature stores and ensures that autonomous agents never train on garbage. Crucially, error budgets give teams room to move fast while maintaining a hard stop against runaway defects.
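
A quality gate of this kind can be approximated in a few lines. The sketch below measures duplicate and null ratios for a batch and lists which error budgets were exceeded; the field names and the helper functions are hypothetical, and the 0.5 % duplicate budget simply reuses the example figure above.

```python
def quality_report(records: list, key: str, required_fields: list) -> dict:
    """Measure duplicate-key and missing-field ratios for one batch of records."""
    seen = set()
    duplicates = 0
    nulls = 0
    for record in records:
        record_key = record.get(key)
        if record_key in seen:
            duplicates += 1
        else:
            seen.add(record_key)
        if any(record.get(name) is None for name in required_fields):
            nulls += 1
    total = len(records) or 1  # avoid dividing by zero on an empty batch
    return {"duplicate_ratio": duplicates / total, "null_ratio": nulls / total}


def exceeded_budgets(report: dict, budgets: dict) -> list:
    """Return the names of every check whose measured ratio is over budget."""
    return [name for name, limit in budgets.items() if report[name] > limit]


# Example budgets: 0.5 % duplicates (from the text) and an assumed 1 % null ceiling.
DEFAULT_BUDGETS = {"duplicate_ratio": 0.005, "null_ratio": 0.01}
```

A non-empty result from `exceeded_budgets` is the signal to halt downstream jobs and open an incident.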

Who Gets the Pager?

SLOs are worthless if no one is on the hook when they break. Every topic, table, and feature view lists a primary and secondary owner in the metadata catalog. An automated escalation ladder first pings the on-call via Slack; if unacknowledged, it escalates to SMS, then finally voice. Ownership changes sync with HR systems so coverage persists during vacations or turnover. This clear chain of accountability eliminates “not my table” finger-pointing, reduces mean time to resolution, and reassures leadership that someone will always pick up when autonomy falters.

Learning From Incidents

Blameless post-mortems close the loop. After an outage, the involved teams analyze timeline, root cause, and systemic weaknesses. They translate findings into action items - new unit tests, stricter schema contracts, or improved observability dashboards - and track them to completion. Metrics show that organizations practicing disciplined learning reduce recurrence of similar incidents by 60 % or more within a year. Beyond technical fixes, the ritual fosters psychological safety, encouraging engineers to surface near-misses before they blossom into full outages, thereby continuously raising the reliability bar for Agentic AI.

Dollars & Cores: FinOps for Runaway AI

Tag Everything for Chargeback

Cost accountability begins with visibility. Every compute cluster, job, bucket, and even individual model training run should carry metadata tags for team, project, environment, and business objective. Cloud billing exports then resolve spend down to the penny, allowing daily chargeback or show-back reports. When stakeholders see their exact GPU-hour or terabyte-month consumption, budget conversations shift from abstract complaints to concrete trade-offs: “Do we optimize the feature pipeline or accept the extra $5,000 this quarter?” Clear attribution curbs the tragedy of the commons and drives more responsible experimentation.
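
To make the attribution step concrete, the sketch below rolls up billing rows by a tag. The row shape (`cost_usd`, `tags`) is an assumption for illustration; real cloud billing exports use provider-specific column names.

```python
from collections import defaultdict


def chargeback(billing_rows: list, tag: str = "team") -> dict:
    """Sum cost per tag value; rows without the tag land in an UNTAGGED bucket."""
    totals = defaultdict(float)
    for row in billing_rows:
        owner = row.get("tags", {}).get(tag, "UNTAGGED")
        totals[owner] += row["cost_usd"]
    return dict(totals)
```

Keeping an explicit `UNTAGGED` bucket makes missing tags visible in the report instead of silently dropping that spend.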

Scale Down Before It Costs You

Elasticity cannot be an afterthought. Kubernetes Vertical Pod Autoscaler continuously rightsizes container CPU and memory requests based on historical usage patterns, while serverless Spark kills idle executors in seconds. Combined with time-based or event-based cluster shutdown policies, these mechanisms can slash 30–50 % of wasted capacity. Alerts notify teams when utilization dips below a healthy threshold, prompting code optimization or job rescheduling. By enforcing “scale to zero” as the default, organizations free budget headroom for novel Agentic experiments rather than burning cash on idle silicon.

Spot Instances Without the Sweat

Pre-emptible compute offers discounts of up to 90 %, yet many teams avoid it for fear of losing work. Checkpointing libraries solve this: every few minutes, model state, optimizer parameters, and random seeds flush to the lake. When the spot node disappears, the orchestrator reschedules the task on a fresh instance, which loads the checkpoint and resumes training with minimal disruption. Policy engines decide which jobs qualify - non-urgent backfills or hyper-parameter sweeps - so mission-critical, real-time agents stay on stable nodes. Savings compound quickly, often funding additional GPU clusters within months.
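
The checkpoint-and-resume pattern can be sketched with a toy training loop. Everything here is a simplified assumption: the "model" is a single float, the checkpoint is a local pickle file rather than an object in the lake, and `preempt_at` simulates the spot node disappearing.

```python
import os
import pickle
import random
from typing import Optional


def save_checkpoint(path: str, state: dict) -> None:
    """Write to a temp file, then rename, so a preemption never leaves a torn file."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "wb") as fh:
        pickle.dump(state, fh)
    os.replace(tmp_path, path)


def load_checkpoint(path: str) -> Optional[dict]:
    if not os.path.exists(path):
        return None
    with open(path, "rb") as fh:
        return pickle.load(fh)  # only load checkpoints you wrote yourself


def train(path: str, total_steps: int, checkpoint_every: int,
          preempt_at: Optional[int] = None) -> float:
    """Toy loop: resumes from the last checkpoint, including the random-number state."""
    rng = random.Random(0)
    step, weight = 0, 0.0
    state = load_checkpoint(path)
    if state is not None:
        step, weight = state["step"], state["weight"]
        rng.setstate(state["rng_state"])
    while step < total_steps:
        if preempt_at is not None and step == preempt_at:
            raise RuntimeError("simulated spot preemption")
        weight += rng.random()  # stand-in for one optimizer update
        step += 1
        if step % checkpoint_every == 0:
            save_checkpoint(path, {"step": step, "weight": weight,
                                   "rng_state": rng.getstate()})
    return weight
```

Saving the random-number state alongside the weights is what lets a resumed run reproduce the result of an uninterrupted one; work done after the last checkpoint is simply repeated.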

Smart Tiering & Data Diets

Storing everything on premium SSD buckets is financial folly. Lifecycle rules automatically migrate cold partitions to cheaper object tiers like Glacier Instant Retrieval or even tape. Duplicate snapshots and obsolete intermediate files are purged after regulatory retention windows expire. Compression audits ensure columnar formats use optimal codecs, trimming both storage and I/O. Together, tiering and pruning can cut lake footprint by 60 % without sacrificing auditability, accelerating query performance while freeing up budget for innovation projects instead of endless data hoarding.

Forecasting Tomorrow’s Bill

Historical spend is only half the story; growth curves matter. Time-series forecasting models ingest billing exports, planned project timelines, and capacity-scaling rules to predict monthly costs six to twelve months ahead. Finance receives early warnings when projected spend threatens to breach caps, allowing course corrections - job deferrals, refactors, or reserved-instance purchases - before invoices land. This proactive stance turns cloud bills from nasty surprises into manageable line items and helps data teams maintain credibility with finance counterparts.

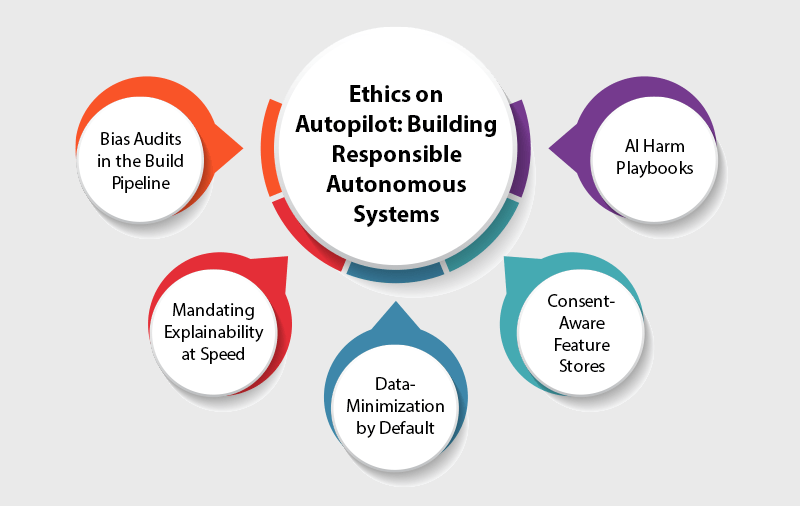

Ethics on Autopilot: Building Responsible Autonomous Systems

Bias Audits in the Build Pipeline

Fairness cannot be a once-a-year audit; it must be CI-driven. During each build, automated tests compute disparate impact, equalized odds, and demographic parity across protected classes like gender, race, or socioeconomic tier. If any metric crosses predefined thresholds - say, a 25 % adverse impact ratio - the build fails and opens a ticket for data rebalancing or feature review. Integrating bias checks alongside accuracy tests holds developers accountable for ethical outcomes, not just performance, embedding responsible AI into the development DNA.
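
As one possible shape for such a build-time check, the sketch below computes a disparate impact ratio (the selection rate of a protected group divided by that of a reference group) and fails when it drops below a configurable floor. The 0.8 default follows the widely used four-fifths convention and is an assumption here, not the threshold quoted above; the function names and the input format are hypothetical.

```python
def selection_rates(outcomes: list) -> dict:
    """outcomes is a list of (group, favorable) pairs; returns favorable rate per group."""
    totals, favorable = {}, {}
    for group, is_favorable in outcomes:
        totals[group] = totals.get(group, 0) + 1
        favorable[group] = favorable.get(group, 0) + (1 if is_favorable else 0)
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(outcomes: list, protected: str, reference: str) -> float:
    """Selection rate of the protected group relative to the reference group."""
    rates = selection_rates(outcomes)
    if rates[reference] == 0:
        raise ValueError("reference group has no favorable outcomes")
    return rates[protected] / rates[reference]


def passes_bias_gate(outcomes: list, protected: str, reference: str,
                     min_ratio: float = 0.8) -> bool:
    """Return False (fail the build) when the ratio falls below the assumed floor."""
    return disparate_impact_ratio(outcomes, protected, reference) >= min_ratio
```

In a CI job this would run on a held-out evaluation set, once per protected attribute, next to the accuracy tests.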

Mandating Explainability at Speed

Regulations and user trust both demand transparency. Models must produce feature-attribution, counterfactual, or surrogate explanations within a tight latency budget - typically under 200 milliseconds. Techniques such as SHAP value caching, Gradient-weighted Class Activation Mapping (Grad-CAM), or model-specific attention visualizations are pre-computed or optimized for GPU execution. An API endpoint returns both the prediction and its rationale, enabling customer-facing apps to display “you were offered this rate because…” disclosures instantly. Fast explainability transforms opacity from a liability into a feature users appreciate and regulators applaud.

Data-Minimization by Default

Start with the least sensitive data - hashes, age buckets, city-level geo - escalating to finer granularity only if accuracy justifies the privacy cost. Encryption at rest, field-level tokenization, and differential privacy noise protect any unavoidable personal data. Regular privacy impact assessments quantify risk reduction and feed back into design decisions. The result is a leaner data footprint that meets compliance mandates, reduces breach surface area, and demonstrates ethical stewardship to customers.

Consent-Aware Feature Stores

Opt-in and opt-out preferences change daily, especially in regions with strict data-protection laws. A centralized consent ledger records each user’s status and synchronizes with the feature store. Query hooks reference this ledger: if a user revokes consent, guarded fields return masked values or NULL, while aggregates reweight to exclude that individual. Engineering teams avoid brittle conditional logic in model code, and legal teams rest easier knowing dynamic consent compliance is enforced uniformly across all autonomous systems.
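
A query hook of this kind might look like the sketch below, which assumes the consent ledger is a simple mapping from user ID to a boolean and that the guarded field names are known in advance. Both the names and the data shapes are hypothetical.

```python
# Assumed set of fields that require consent before they may be read.
GUARDED_FIELDS = frozenset({"age_bucket", "city"})


def read_features(user_id: str, row: dict, consent_ledger: dict,
                  guarded: frozenset = GUARDED_FIELDS) -> dict:
    """Return the feature row, masking guarded fields to None without consent."""
    if consent_ledger.get(user_id, False):
        return dict(row)
    return {name: (None if name in guarded else value) for name, value in row.items()}


def consented_mean(values_by_user: dict, consent_ledger: dict):
    """Aggregate only over users with active consent; None if nobody has consented."""
    allowed = [value for user_id, value in values_by_user.items()
               if consent_ledger.get(user_id, False)]
    return sum(allowed) / len(allowed) if allowed else None
```

Treating a user who is missing from the ledger as not consented is the conservative default; model code never sees the ledger and only receives masked or unmasked rows.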

AI Harm Playbooks

Even the best safeguards cannot eliminate all risk. Organizations prepare runbooks detailing how to detect, triage, and remediate AI-caused harm - financial loss, safety hazards, reputational damage. Steps include disabling the offending model via feature flag, rolling back to a known-good version, notifying affected users, and conducting a root-cause investigation within 24 hours. Regular drills ensure teams execute flawlessly when real incidents occur. A public post-incident report closes the loop, reinforcing accountability and continuous improvement.

Perpetual Motion: Feedback Loops That Teach Machines to Self-Improve

Active Learning for Unknowns

Autonomous agents often face edge cases their training data never covered. Active-learning wrappers measure prediction confidence and route low-confidence instances to human annotators via integrated labeling tools. Newly labeled data re-enters the training corpus nightly, and the model fine-tunes to cover these blind spots. Over time, annotation volume drops as confidence rises, proving the loop’s efficiency. This targeted approach minimizes labeling costs while continuously expanding the agent’s competence frontier.
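
The routing step at the heart of this loop is simple confidence thresholding. In the sketch below, the 0.7 threshold and the `(item_id, class_probabilities)` input format are assumptions; confidence is taken as the highest class probability, which is only one of several possible uncertainty measures.

```python
def route_predictions(predictions: list, threshold: float = 0.7) -> tuple:
    """Split predictions into auto-accepted items and items sent to human annotators.

    predictions is a list of (item_id, class_probabilities) pairs.
    """
    auto_accepted, needs_review = [], []
    for item_id, probabilities in predictions:
        confidence = max(probabilities)
        if confidence >= threshold:
            auto_accepted.append(item_id)
        else:
            needs_review.append(item_id)
    return auto_accepted, needs_review
```

Tracking the size of the review queue over time gives the annotation-volume signal described above.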

Reinforcement Learning from Human Scores

When outcomes are subjective - customer satisfaction, policy relevance - numerical labels fall short. Reinforcement learning from human feedback (RLHF) captures nuanced preferences by letting experts score agent actions. A reward model fits these scores, and the policy network fine-tunes to maximize predicted reward. Periodic human reviews keep the reward model aligned with evolving business goals. This methodology marries quantitative optimization with qualitative judgment, ensuring agents not only perform tasks correctly but do so in ways humans deem valuable.

Digital Twins for Risk-Free Experimentation

Deploying untested strategies in production can be catastrophic. Digital-twin environments simulate supply chains, power grids, or e-commerce ecosystems with near-realistic physics and economic behaviors. Agents test alternative policies - different routing algorithms, surge-pricing curves - collecting performance metrics without real-world fallout. Only configurations that surpass a confidence threshold graduate to live trials. The twin thus becomes a sandbox for innovation, accelerating experimentation cycles while safeguarding customer experience and physical assets.

Federated Learning at the Edge

Data residency laws and privacy concerns often block centralized training. Federated learning lets edge devices - phones, drones, retail kiosks - train local model copies on sensitive data, then send encrypted gradient updates to a central aggregator in the lake. Aggregation produces a global model without ever moving raw data off-device. Secure aggregation and differential privacy further obscure individual contributions, satisfying regulatory requirements while still harnessing diverse, real-world data for better generalization.
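
The aggregation step can be illustrated with plain federated averaging, where each client's contribution is weighted by its local sample count. Note the simplifications: this sketch averages model weights rather than gradients, and it omits the encryption, secure aggregation, and differential-privacy noise mentioned above. The function name and input format are hypothetical.

```python
def federated_average(client_updates: list) -> list:
    """Weighted average of client weight vectors.

    client_updates is a list of (num_local_samples, weights) pairs, where every
    weights list has the same length.
    """
    if not client_updates:
        raise ValueError("need at least one client update")
    total_samples = sum(count for count, _ in client_updates)
    dimension = len(client_updates[0][1])
    return [
        sum(count * weights[i] for count, weights in client_updates) / total_samples
        for i in range(dimension)
    ]
```

Only the weight vectors and sample counts reach the aggregator; the raw training data stays on each device.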

Graduation, Sunsetting, Fleet Health

A fleet of agents can’t evolve haphazardly. Lifecycle management dashboards track KPIs - accuracy, latency, cost, ethical compliance - for every model version. Graduation criteria promote beta agents to production once they outperform incumbents on statistically significant samples. Conversely, models that underperform or exceed cost budgets are sunset, freeing resources. Fleet-level health scores summarize overall performance, guiding leadership on where to invest next. Structured lifecycle governance keeps innovation orderly and prevents an unmanageable zoo of half-supported models.
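
One way to make "outperform incumbents on statistically significant samples" concrete is a one-sided two-proportion z-test on success counts. This is just one reasonable choice of test, not necessarily the one any given platform uses; the function names are hypothetical, and 1.645 is the standard one-sided critical value at the 5 % level.

```python
import math


def two_proportion_z(success_a: int, n_a: int, success_b: int, n_b: int) -> float:
    """z-statistic for the difference in success rates (a minus b), pooled variance."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if standard_error == 0:
        return 0.0  # both groups are all-success or all-failure; no evidence either way
    return (p_a - p_b) / standard_error


def should_graduate(challenger_ok: int, challenger_n: int,
                    incumbent_ok: int, incumbent_n: int,
                    z_critical: float = 1.645) -> bool:
    """Promote only if the challenger beats the incumbent at the chosen significance level."""
    z = two_proportion_z(challenger_ok, challenger_n, incumbent_ok, incumbent_n)
    return z > z_critical
```

The same gate run in reverse, with a cost or accuracy floor, can flag candidates for sunsetting.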

Conclusion

Operational excellence is the flywheel that converts a beautifully architected lakehouse into sustained competitive advantage. When MLOps rigor, contractual trust, cost discipline, ethical guardrails, and continuous-learning loops work in concert, Agentic AI becomes a reliable partner - not a risky science project.

Ready to embed these best practices into your own data and AI stack? Connect with Espire’s expert team today, and let’s operationalize your autonomous future - securely, responsibly, and at scale.